Each model will be parallelised using 16 OpenMP threads. In this example 10 models will be run one after another on a single node of the cluster (on this particular cluster a single node has 16 cores/threads available). # Set number of OpenMP threads for each gprMax model export OMP_NUM_THREADS = 16 # Run gprMax with input file cd $HOME/gprMax # Load and activate Anaconda environment for gprMax, i.e. #!/bin/sh # Change to current working directory: #$ -cwd # Specify runtime (hh:mm:ss): #$ -l h_rt=01:00:00 # Email options: #$ -m ea -M # Parallel environment ($NSLOTS): #$ -pe sharedmem 16 # Job script name: #$ -N gprmax_omp.sh # Initialise environment module There is therefore an alternate MPI task farm implementation that does not use the MPI spawn mechanism, and is activated using the -mpi-no-spawn command line option. This is sometimes not supported or properly configured on HPC systems. Our default MPI task farm implementation (activated using the -mpi command line option) makes use of the MPI spawn mechanism. to create a B-scan with 60 traces and use MPI to farm out each trace: (gprMax)$ python -m gprMax user_models/cylinder_Bscan_2D.in -n 60 -mpi 61. This option is most usefully combined with -n to allow individual models to be farmed out using a MPI task farm, e.g. It can be used with the -mpi command line option, which specifies the total number of MPI tasks, i.e. Overall this creates what is know as a mixed mode OpenMP/MPI job.īy default the MPI task farm functionality is turned off. Within each independent model OpenMP threading will continue to be used (as described above). Each A-scan can be task-farmed as a independent model. This can be useful in many GPR simulations where a B-scan (composed of multiple A-scans) is required.

#Install openmp and openmpi series#

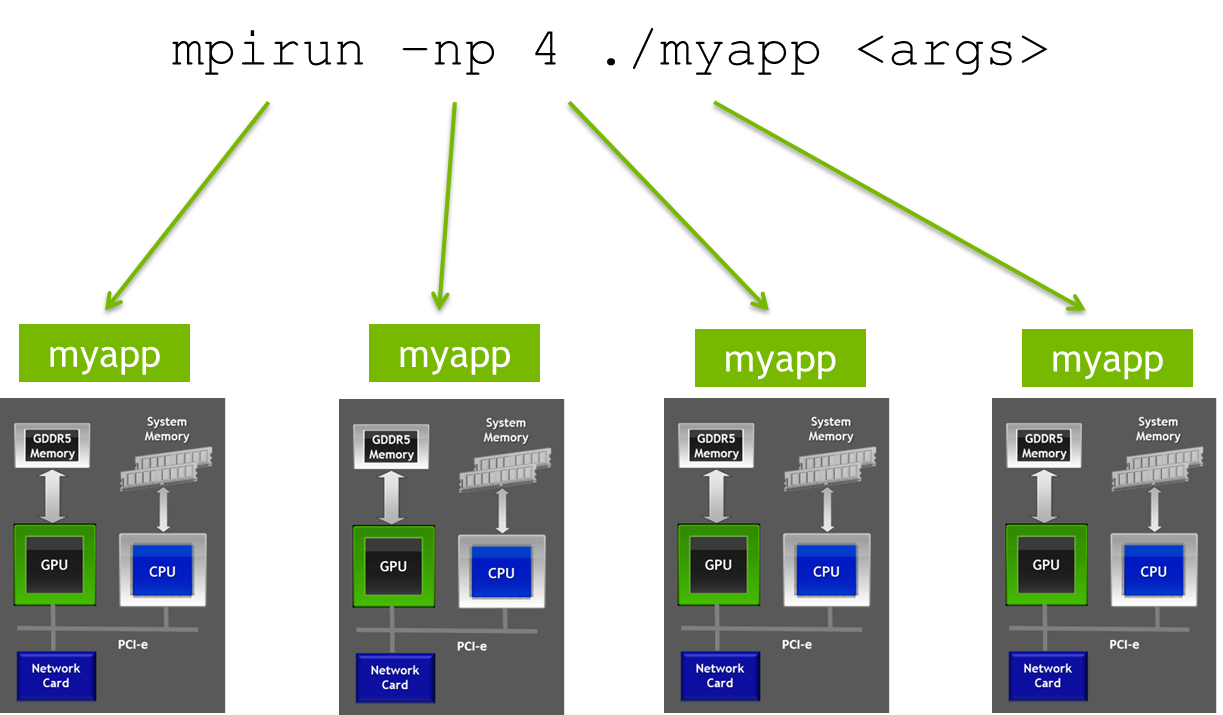

The Message Passing Interface (MPI) has been utilised to implement a simple task farm that can be used to distribute a series of models as independent tasks.
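As a rough illustration of how the `-n` and `-mpi` values in the B-scan example relate, the sketch below builds that command line from a trace count. The helper names `mpi_tasks_for` and `gprmax_farm_cmd` are hypothetical and not part of gprMax. The "one task more than the number of traces" rule is an assumption inferred only from the `-n 60 -mpi 61` example; other task counts may well be valid. The script only prints the command and does not run gprMax.

```shell
#!/bin/sh
# Hypothetical helper (not part of gprMax): derive the -mpi value from the
# number of traces. ASSUMPTION: one MPI task per trace plus one extra task,
# inferred only from the "-n 60 -mpi 61" example.
mpi_tasks_for() {
    traces=$1
    echo $((traces + 1))
}

# Hypothetical helper: print (do not execute) the gprMax command line for a
# B-scan with the given input file and number of traces.
gprmax_farm_cmd() {
    infile=$1
    traces=$2
    echo "python -m gprMax $infile -n $traces -mpi $(mpi_tasks_for "$traces")"
}

# Reproduces the command from the B-scan example.
gprmax_farm_cmd user_models/cylinder_Bscan_2D.in 60
```

Printing the command instead of running it makes it easy to check the values before pasting the line into a job script.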
